About Me

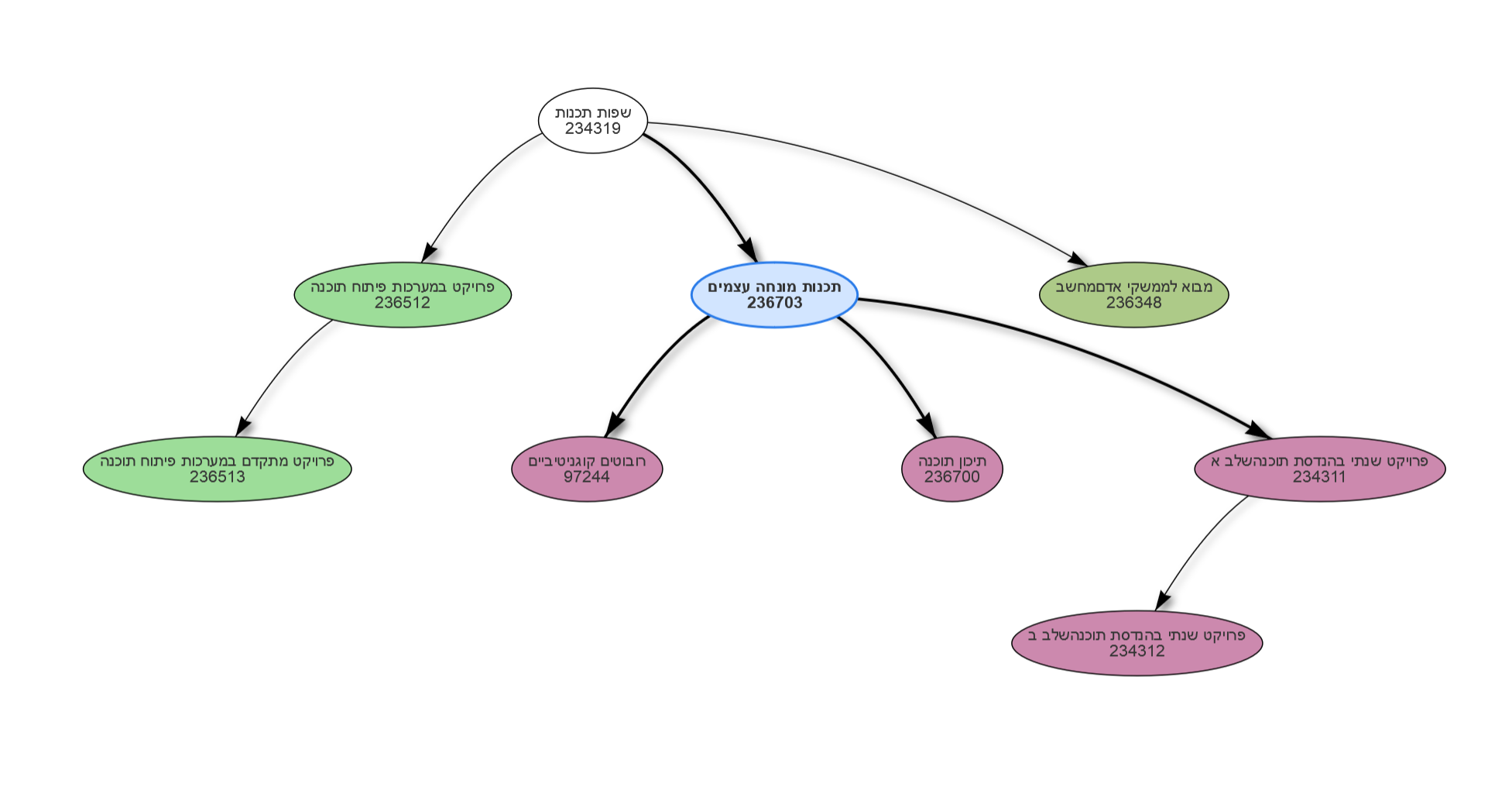

Ph.D., Compiler Engineer at Starkware

Father of two extraordinary beings and husband to the one who always knows best.

Master of my domain (when I'm home alone).

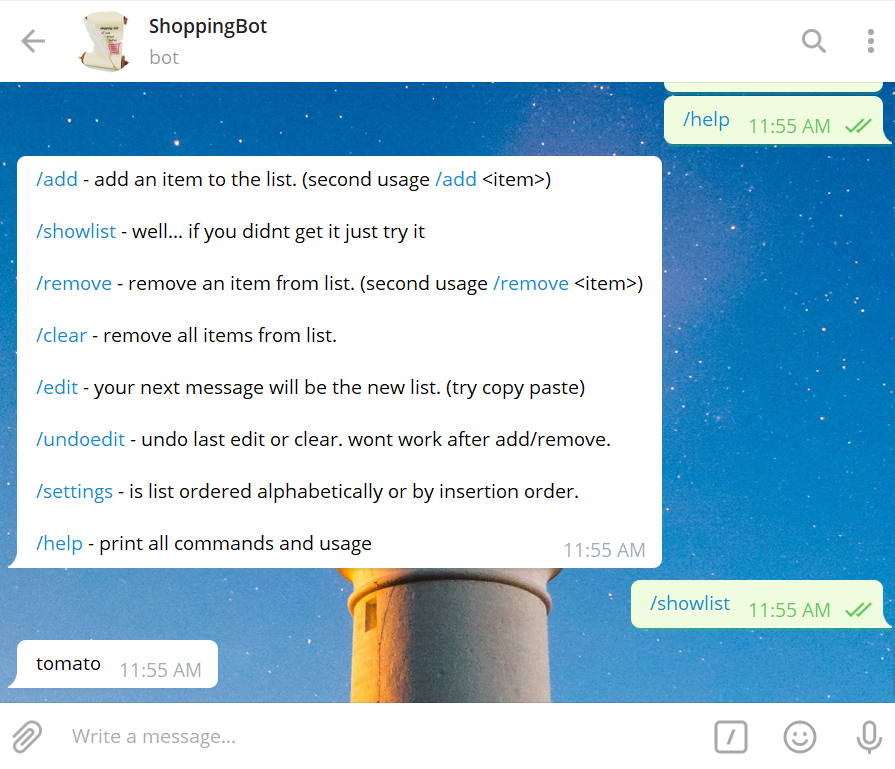

I really love to build. Whether it's a program, a website, a video game for my daughter, or even a bench for the lobby, each creation is a new source of joy. I also enjoy working on hard computational and mathematical problems and finding elegant solutions to them.